Working Paper Series no. 809: Return on Investment on AI: The Case of Capital Requirement

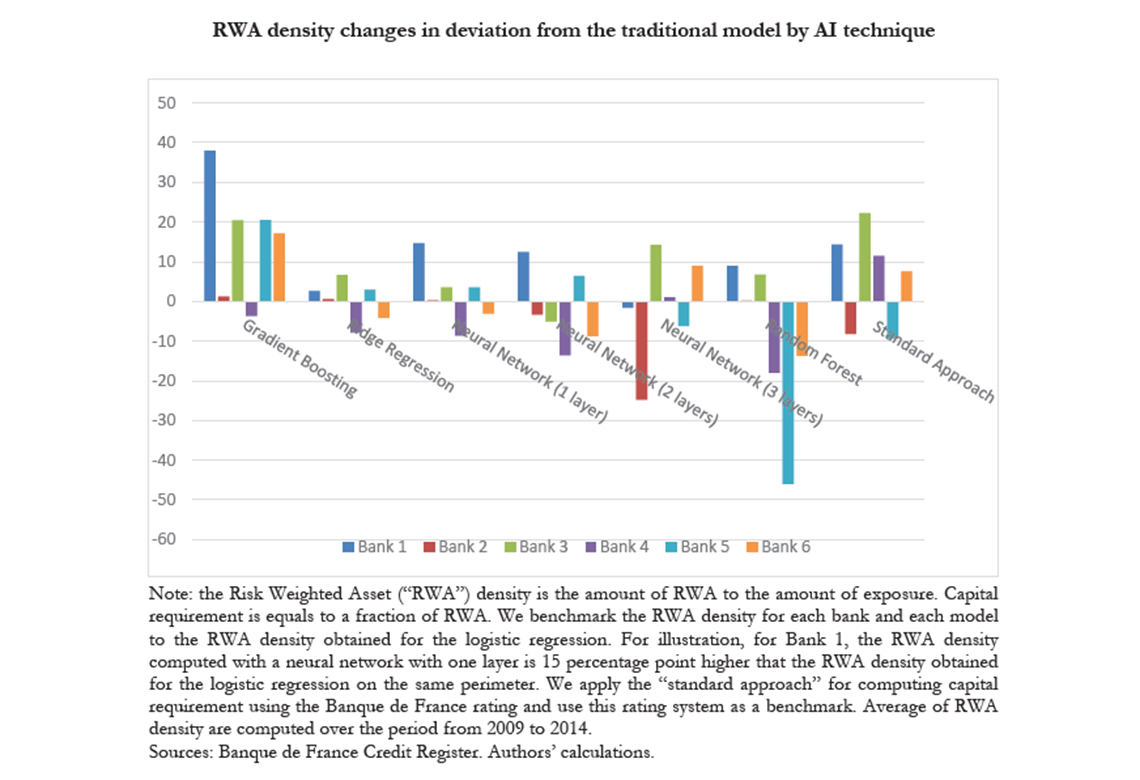

Taking advantage of granular data, we measure the change in bank capital requirements resulting from the implementation of AI techniques to predict corporate defaults. For each of the largest banks operating in France, we design an algorithm to build pseudo-internal models of credit risk management for a range of methodologies extensively used in AI (random forest, gradient boosting, ridge regression, deep learning). We compare these models to the traditional model usually in place, which relies essentially on a combination of logistic regression and expert judgement. The comparison is made along two sets of criteria capturing (i) the ability to pass the compliance tests used by regulators during on-site model validation missions, and (ii) the induced changes in capital requirements. The models differ noticeably both in their ability to pass the regulatory tests and in the reduction in capital requirements they deliver. While passing compliance tests about as well as the traditional model, neural networks provide the strongest incentive for banks to adopt AI for their internal models of corporate credit risk, as they lead in some cases to sizeable reductions in capital requirements.[1]

In recent years, the opportunities offered by Artificial Intelligence (“AI” hereafter) for optimizing processes in the financial services industry have attracted considerable attention. Banks have been using statistical models to manage their risks for years. Since the Basel II accords signed in 2004, they may use these internal models to estimate their own funds requirements (i.e. the minimum amount of capital they must hold by law), provided they have prior authorisation from their supervisor (the “advanced approach”). In France, banks developed their internal models in the years preceding their actual validation, mostly in 2008, at a time when traditional techniques prevailed and AI techniques either could not be implemented or were not considered.
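
To make concrete how an internal model's default predictions feed into own funds requirements, the sketch below implements the standard Basel II IRB risk-weight function for corporate exposures. It is an illustration under stated assumptions, not the computation used in the paper, which this section does not describe: it assumes the foundation-approach supervisory LGD of 45% and an effective maturity of 2.5 years, omits the SME firm-size adjustment, and the function name `irb_corporate_k` is hypothetical. Because K rises with the estimated PD over the usual range of corporate PDs, a model that assigns lower PDs to the same portfolio while still passing validation mechanically lowers the capital charge.

```python
from math import exp, log, sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal: provides cdf() and inv_cdf()


def irb_corporate_k(pd: float, lgd: float = 0.45, maturity: float = 2.5) -> float:
    """Capital requirement K per unit of exposure at default (EAD).

    Sketch of the Basel II IRB formula for corporate exposures, without the
    SME firm-size adjustment. Assumed defaults: LGD = 45%, M = 2.5 years.
    """
    if not 0.0 <= pd < 1.0:
        raise ValueError("PD must lie in [0, 1); defaulted exposures are out of scope")
    pd = max(pd, 0.0003)  # regulatory floor of 0.03% on corporate PDs

    # Asset correlation: moves from 24% (low PD) towards 12% (high PD).
    w = (1 - exp(-50 * pd)) / (1 - exp(-50))
    r = 0.12 * w + 0.24 * (1 - w)

    # Slope of the maturity adjustment.
    b = (0.11852 - 0.05478 * log(pd)) ** 2

    # PD conditional on a 99.9th-percentile systematic shock (Vasicek model).
    g = STD_NORMAL.inv_cdf
    cond_pd = STD_NORMAL.cdf((g(pd) + sqrt(r) * g(0.999)) / sqrt(1 - r))

    # Unexpected loss only: expected loss (PD * LGD) is subtracted.
    return (lgd * cond_pd - pd * lgd) * (1 + (maturity - 2.5) * b) / (1 - 1.5 * b)


if __name__ == "__main__":
    for p in (0.005, 0.01, 0.02):
        k = irb_corporate_k(p)
        # Risk weight = 12.5 * K; capital requirement = 8% of RWA = K * EAD.
        print(f"PD={p:.2%}  K={k:.4f}  risk weight={12.5 * k:.2%}")
```

With these assumed parameters, a 1% PD gives K ≈ 0.0739, i.e. a risk weight of about 92.3%.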

In this paper, taking advantage of granular data, we measure to what extent banks can lower their capital requirements by using AI techniques, under the constraint of getting their internal models approved by the supervisor. We set up a traditional model for each of the major banking groups operating in France in the corporate loans market. This traditional model, based on a combination of logistic regression and expert judgement, aims to replicate the models described in the regulatory validation reports and put in place by banks for predicting corporate defaults and computing capital requirements. On the same data, we then estimate pseudo “internal” models of corporate defaults using the four methodologies most extensively used in the AI field: neural networks, random forest, gradient boosting and penalized ridge regression.
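
The statistical core of the traditional model is a logistic regression of default on firm characteristics. As a minimal sketch of that building block only (the expert-judgement overlay and the four AI methodologies are not modelled), the code below fits an unpenalised logistic regression by batch gradient descent in pure Python. The two features, the synthetic data and the helper names `fit_logit` and `predict_pd` are hypothetical; an actual bank model would rely on a statistical library, many more variables and out-of-sample validation.

```python
from math import exp


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + exp(-z))
    e = exp(z)
    return e / (1.0 + e)


def fit_logit(X, y, lr=0.5, epochs=5000):
    """Fit an unpenalised logistic regression by batch gradient descent
    on the mean log-loss. Returns (weights, intercept)."""
    n, p = len(X), len(X[0])
    w, b = [0.0] * p, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * p, 0.0
        for xi, yi in zip(X, y):
            # Residual between predicted PD and observed default flag.
            err = sigmoid(b + sum(wj * xj for wj, xj in zip(w, xi))) - yi
            for j in range(p):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict_pd(w, b, x):
    """Predicted probability of default for one firm."""
    return sigmoid(b + sum(wj * xj for wj, xj in zip(w, x)))


# Synthetic, purely illustrative data: [leverage, return on assets]; 1 = default.
X = [[0.2, 0.10], [0.3, 0.08], [0.4, 0.05], [0.7, 0.00], [0.8, -0.05], [0.9, -0.02]]
y = [0, 0, 0, 1, 1, 1]

w, b = fit_logit(X, y)
print(predict_pd(w, b, [0.85, -0.03]), predict_pd(w, b, [0.25, 0.09]))
```

Adding an L2 penalty on `w` to the loss would give a ridge-penalized logistic regression, one plausible reading of the “penalized ridge regression” listed above; the paper's exact specification is not given in this section.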

We compare these models to the traditional model along two sets of criteria capturing (i) the ability to pass the compliance tests used by regulators during on-site model validation missions, and (ii) the induced changes in capital requirements. The models differ noticeably both in their ability to pass the regulatory tests and in the reduction in capital requirements they deliver. Prone to overfitting, the random forest methodology fails the compliance tests. The gradient boosting methodology leads to a capital charge higher than the one expected by regulators when no model is in place. While passing compliance tests about as well as the traditional model, neural networks in some cases provide a strong incentive for banks to adopt AI for their internal models of corporate credit risk, as they lead to sizeable reductions in capital requirements.
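
The compliance tests themselves are not detailed in this section. As a hedged sketch assuming two checks commonly applied when validating PD models, the code below computes (i) discriminatory power as the AUC and the corresponding Gini coefficient, and (ii) a one-sided binomial calibration test comparing the defaults observed in a rating grade with the grade's PD. The function names are hypothetical, the binomial test assumes independent defaults (it ignores default correlation), and this is not presented as the paper's actual test battery.

```python
from math import exp, lgamma, log


def auc(pds, defaulted):
    """Area under the ROC curve: probability that a randomly chosen defaulter
    received a higher PD than a randomly chosen non-defaulter (ties count 1/2)."""
    pos = [p for p, d in zip(pds, defaulted) if d]
    neg = [p for p, d in zip(pds, defaulted) if not d]
    if not pos or not neg:
        raise ValueError("need at least one defaulter and one non-defaulter")
    wins = sum(1.0 if a > c else 0.5 if a == c else 0.0 for a in pos for c in neg)
    return wins / (len(pos) * len(neg))


def gini(pds, defaulted):
    """Gini coefficient (accuracy ratio), the usual rescaling 2 * AUC - 1."""
    return 2.0 * auc(pds, defaulted) - 1.0


def binomial_pvalue(n_obligors, n_defaults, pd):
    """One-sided p-value P(D >= n_defaults) for D ~ Binomial(n_obligors, pd).

    Assumes independent defaults; a small p-value suggests the grade's PD
    is underestimated. Computed in log space to avoid overflow for large n.
    """
    if not 0.0 < pd < 1.0:
        raise ValueError("pd must lie strictly between 0 and 1")
    log_n_fact = lgamma(n_obligors + 1)
    total = 0.0
    for k in range(n_defaults, n_obligors + 1):
        log_pmf = (log_n_fact - lgamma(k + 1) - lgamma(n_obligors - k + 1)
                   + k * log(pd) + (n_obligors - k) * log(1.0 - pd))
        total += exp(log_pmf)
    return min(total, 1.0)


if __name__ == "__main__":
    print(gini([0.01, 0.02, 0.05, 0.20], [0, 0, 1, 1]))
    print(binomial_pvalue(100, 5, 0.01))
```

For example, a grade with a 1% PD that records 5 defaults among 100 obligors gets a p-value of about 0.0034, which would flag the PD as too low. Comparing the AUC on the estimation sample with the AUC on a hold-out sample is one simple way to detect the kind of overfitting reported above for random forests.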

- Published on 03/11/2021

- 37 pages

Updated on: 03/11/2021 17:11